Tracking the Movement of Cyborg Cockroaches

March 7, 2017 | cjbrown8

New research from North Carolina State University’s Department of Electrical & Computer Engineering offers insights into how far and how fast cyborg cockroaches – or biobots – move when exploring new spaces. The work moves researchers closer to their goal of using biobots to explore collapsed buildings and other spaces in order to identify survivors.

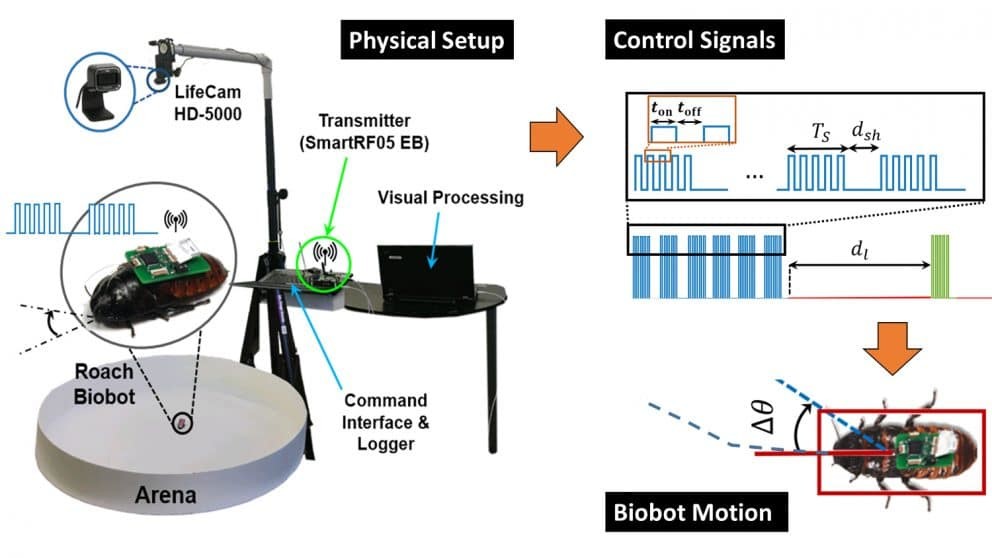

NC State researchers have developed cockroach biobots that can be remotely controlled and carry technology that may be used to map disaster areas and identify survivors in the wake of a calamity.

For this technology to become viable, the researchers needed to answer fundamental questions about how and where the biobots move in unfamiliar territory. Two forthcoming papers address those questions.

The first paper asks whether the biobots' onboard technology can accurately determine whether, and how, the biobots are moving.

The researchers tracked biobot movements visually and compared the biobots' actual motion to the motion reported by their onboard inertial measurement units (IMUs). The study found that the onboard technology was a reliable indicator of how the biobots were moving.
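To make the idea of checking IMU readings against visual observation concrete, here is a minimal sketch. It is not the method from the paper: it assumes a hypothetical stream of accelerometer magnitudes (in g), labels each fixed-size window as "moving" or "stationary" with a simple variance threshold, and reports how often those labels agree with labels taken from video. The function names, window size and threshold are all illustrative assumptions.

```python
from statistics import pstdev


def label_motion(accel_magnitudes, window=5, threshold=0.05):
    """Label each full window of samples as 'moving' or 'stationary'.

    Hypothetical rule: a window counts as 'moving' when the population
    standard deviation of the accelerometer magnitude exceeds `threshold`.
    Trailing samples that do not fill a whole window are ignored.
    """
    labels = []
    for start in range(0, len(accel_magnitudes) - window + 1, window):
        chunk = accel_magnitudes[start:start + window]
        labels.append("moving" if pstdev(chunk) > threshold else "stationary")
    return labels


def agreement(predicted, ground_truth):
    """Fraction of windows where the IMU-based label matches the visual label."""
    if len(predicted) != len(ground_truth):
        raise ValueError("label sequences must have the same length")
    matches = sum(p == g for p, g in zip(predicted, ground_truth))
    return matches / len(predicted)


# Hypothetical data: five nearly constant samples, then five fluctuating ones.
samples = [1.00, 1.01, 1.00, 0.99, 1.00, 1.20, 0.80, 1.30, 0.70, 1.10]
imu_labels = label_motion(samples)
video_labels = ["stationary", "moving"]  # hypothetical labels from video
print(agreement(imu_labels, video_labels))  # prints 1.0
```

A real study would use richer features and more motion modes; the threshold rule is here only because it keeps the comparison between sensor output and visual ground truth easy to follow.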

The second paper addresses bigger questions: How far will the biobots travel? How fast? Are biobots more efficient at exploring space when allowed to move without guidance? Or can remote-control commands expedite the process?

These questions are important because the answers could help researchers determine how many biobots they may need to introduce to an area in order to explore it effectively in a given amount of time.
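As a rough illustration of how such answers might feed into planning, the simplest possible estimate divides the area to search by the ground one biobot covers in the time available, giving $N = \lceil A / (r \cdot T) \rceil$. This formula is an assumption for illustration, not one stated by the researchers: it treats each biobot's coverage rate $r$ as constant and assumes no two biobots ever search the same ground, so it gives an optimistic minimum. All numbers below are hypothetical.

```python
import math


def biobots_needed(area_m2, coverage_rate_m2_per_min, time_budget_min):
    """Crude lower bound on the number of biobots needed to cover an area.

    Assumes each biobot covers new ground at a constant rate and that
    coverage by different biobots never overlaps. Real exploration overlaps
    and stalls, so treat the result as an optimistic minimum.
    """
    if coverage_rate_m2_per_min <= 0 or time_budget_min <= 0:
        raise ValueError("rate and time budget must be positive")
    per_bot_area = coverage_rate_m2_per_min * time_budget_min
    return math.ceil(area_m2 / per_bot_area)


# Hypothetical numbers: 200 m^2 to search, 0.5 m^2/min per biobot, 60 minutes.
print(biobots_needed(200, 0.5, 60))  # prints 7
```

Measured speeds and exploration patterns, like those in the second paper, are what would replace the made-up coverage rate here.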

For this study, researchers introduced biobots into a circular structure. Some biobots were allowed to move at will, while others were given random commands to move forward, left or right.
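The article does not say how often the random commands were sent, so the following is only a sketch of what a random forward/left/right schedule could look like. The gap bounds, the seed parameter and the function name are hypothetical; the seed simply makes a run reproducible.

```python
import random

COMMANDS = ("forward", "left", "right")


def random_command_schedule(duration_s, min_gap_s=1.0, max_gap_s=5.0, seed=None):
    """Generate (time, command) pairs: random commands at random intervals.

    The gap bounds are hypothetical; the study's actual timing is not given.
    """
    rng = random.Random(seed)
    schedule = []
    t = rng.uniform(min_gap_s, max_gap_s)
    while t < duration_s:
        schedule.append((round(t, 2), rng.choice(COMMANDS)))
        t += rng.uniform(min_gap_s, max_gap_s)
    return schedule


for time_s, command in random_command_schedule(20, seed=42):
    print(f"t={time_s:5.2f}s -> {command}")
```

Because the schedule depends only on a clock and a random number generator, a controller like this would not need to know where the biobot is, which fits the idea of an electronic backpack issuing pulses without anyone watching the roach.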

The researchers found that unguided biobots preferred to hug the wall of the circle. But when the researchers sent the biobots random commands, the biobots spent more time moving, moved more quickly and were at least five times more likely to move away from the wall and into open space.
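One simple way to quantify "hugging the wall" from tracked positions is to count how many samples fall within a narrow band next to the arena's edge. The sketch below assumes positions are measured from the arena's center; the band width and the sample track are hypothetical, and this is not necessarily the metric used in the paper.

```python
import math


def fraction_near_wall(positions, arena_radius, band=0.1):
    """Fraction of tracked (x, y) positions within `band` of the arena wall.

    Positions are measured from the arena center at (0, 0), in the same
    units as arena_radius. The band width is a hypothetical choice.
    """
    if not positions:
        raise ValueError("need at least one position")
    near = sum(1 for x, y in positions if arena_radius - math.hypot(x, y) <= band)
    return near / len(positions)


# Hypothetical track in a 1.0 m radius arena: three samples by the wall, one in open space.
track = [(0.95, 0.0), (0.0, 0.97), (-0.92, 0.0), (0.2, 0.1)]
print(fraction_near_wall(track, arena_radius=1.0))  # prints 0.75
```

Computing this fraction separately for unguided and randomly commanded biobots would give one way to compare how much time each group spends along the wall versus in open space.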

“Our earlier studies had shown that we can use neural stimulation to control the direction of a roach and make it go from one point to another,” says Alper Bozkurt, an associate professor of electrical and computer engineering at NC State and co-author of the two papers. “This [second] study shows that by randomly stimulating the roaches we can benefit from their natural walking and instincts to search an unknown area. Their electronic backpacks can initiate these pulses without us seeing where the roaches are and let them autonomously scan a region.”

“This is practical information we can use to get biobots to explore a space more quickly,” says Edgar Lobaton, an assistant professor of electrical and computer engineering at NC State and co-author on the two papers. “That’s especially important when you consider that time is of the essence when you are trying to save lives after a disaster.”

Lead author of the first paper, “A Study on Motion Mode Identification for Cyborg Roaches,” is NC State Ph.D. student Jeremy Cole. The paper was co-authored by Ph.D. student Farrokh Mohammadzadeh, undergraduate Christopher Bollinger, former Ph.D. student Tahmid Latif, Bozkurt and Lobaton.

Lead author of the second paper, “Biobotic Motion and Behavior Analysis in Response to Directional Neurostimulation,” is former NC State Ph.D. student Alireza Dirafzoon. The paper was co-authored by Latif, former Ph.D. student Fengyuan Gong, professor of electrical and computer engineering Mihail Sichitiu, Bozkurt and Lobaton.

Both papers will be presented at the 42nd IEEE International Conference on Acoustics, Speech and Signal Processing, being held March 5-9 in New Orleans.

The work was done with support from the National Science Foundation under grant 1239243.